pandas Guide#

This is a carefully designed introduction to pandas, geared mainly toward new users. More complex recipes are available in the official pandas documentation. This introduction draws on that documentation, so part of the content is copyrighted by pandas. The author extends it with more detailed examples and explanations for easier comprehension.

Customarily, we import as follows:

In [1]: import numpy as np

In [2]: import pandas as pd

In [3]: import seaborn as sns

Object creation#

Creating a Series by passing a list of values, letting pandas create a default integer index:

In [4]: s = pd.Series([1, 3, 5, np.nan, 6, 8]); s

Out[4]:

0 1.0

1 3.0

2 5.0

3 NaN

4 6.0

5 8.0

dtype: float64

Creating a DataFrame by passing a NumPy array, with a datetime index and labeled columns:

In [5]: dates = pd.date_range("20210801", periods=6)

In [6]: dates

Out[6]:

DatetimeIndex(['2021-08-01', '2021-08-02', '2021-08-03', '2021-08-04',

'2021-08-05', '2021-08-06'],

dtype='datetime64[ns]', freq='D')

In [7]: df = pd.DataFrame(np.random.randn(6, 4), index=dates, columns=list("ABCD"))

In [8]: df

Out[8]:

A B C D

2021-08-01 0.217120 0.353121 -0.551206 0.840941

2021-08-02 0.151220 1.155111 0.077040 -0.548415

2021-08-03 -0.239416 0.997688 -1.006559 0.673164

2021-08-04 -0.640489 0.219262 0.606844 -0.652033

2021-08-05 -0.177799 0.023023 1.535067 -0.051603

2021-08-06 -0.070985 0.068657 -0.068792 -1.131507

Creating a DataFrame by passing a dict of objects that can be converted into a series-like structure:

In [9]: df2 = pd.DataFrame(

...: {

...: "A": 4.0,

...: "B": pd.Timestamp("20210801"),

...: "C": pd.Series(1, index=list(range(4)), dtype="float64"),

...: "D": np.array([3] * 4, dtype="int64"),

...: "E": pd.Categorical(["test", "train", "test", "train"]),

...: "F": "cat",

...: }

...: )

...:

In [10]: df2

Out[10]:

A B C D E F

0 4.0 2021-08-01 1.0 3 test cat

1 4.0 2021-08-01 1.0 3 train cat

2 4.0 2021-08-01 1.0 3 test cat

3 4.0 2021-08-01 1.0 3 train cat

The columns of the resulting DataFrame have different dtypes:

In [11]: df2.dtypes

Out[11]:

A float64

B datetime64[ns]

C float64

D int64

E category

F object

dtype: object

or the data type for a specific column:

In [12]: df2.A.dtype

Out[12]: dtype('float64')

If you’re using IPython, tab completion for public attributes is automatically enabled. Here’s a subset of the attributes that will be completed:

In [13]: df2.<TAB> # noqa: E225, E999

df2.A df2.bool

df2.abs df2.boxplot

df2.add df2.C

df2.add_prefix df2.clip

df2.add_suffix df2.columns

df2.align df2.copy

df2.all df2.count

df2.any df2.combine

df2.append df2.D

df2.apply df2.describe

df2.applymap df2.diff

df2.B df2.duplicated

Viewing data#

Here is how to view the top and bottom rows of the frame:

In [14]: df.head()

Out[14]:

A B C D

2021-08-01 0.217120 0.353121 -0.551206 0.840941

2021-08-02 0.151220 1.155111 0.077040 -0.548415

2021-08-03 -0.239416 0.997688 -1.006559 0.673164

2021-08-04 -0.640489 0.219262 0.606844 -0.652033

2021-08-05 -0.177799 0.023023 1.535067 -0.051603

In [15]: df.tail(3)

Out[15]:

A B C D

2021-08-04 -0.640489 0.219262 0.606844 -0.652033

2021-08-05 -0.177799 0.023023 1.535067 -0.051603

2021-08-06 -0.070985 0.068657 -0.068792 -1.131507

Display the index, columns:

In [16]: df.index

Out[16]:

DatetimeIndex(['2021-08-01', '2021-08-02', '2021-08-03', '2021-08-04',

'2021-08-05', '2021-08-06'],

dtype='datetime64[ns]', freq='D')

In [17]: df.columns

Out[17]: Index(['A', 'B', 'C', 'D'], dtype='object')

Display the shape, dimension:

In [18]: df.shape

Out[18]: (6, 4)

In [19]: df.ndim

Out[19]: 2

Note

DataFrame.to_numpy gives a NumPy representation of the underlying data. Note that this can be an expensive operation when your DataFrame has columns with different data types, which comes down to a fundamental difference between pandas and NumPy: NumPy arrays have one dtype for the entire array, while pandas DataFrames have one dtype per column. When you call DataFrame.to_numpy, pandas will find the NumPy dtype that can hold all of the dtypes in the DataFrame. This may end up being object, which requires casting every value to a Python object.

For df, our DataFrame of all floating-point values, DataFrame.to_numpy is fast and doesn’t require copying data.

In [20]: df.to_numpy()

Out[20]:

array([[ 0.2171, 0.3531, -0.5512, 0.8409],

[ 0.1512, 1.1551, 0.077 , -0.5484],

[-0.2394, 0.9977, -1.0066, 0.6732],

[-0.6405, 0.2193, 0.6068, -0.652 ],

[-0.1778, 0.023 , 1.5351, -0.0516],

[-0.071 , 0.0687, -0.0688, -1.1315]])

For df2, the DataFrame with multiple dtypes, DataFrame.to_numpy is relatively expensive.

In [21]: df2.to_numpy()

Out[21]:

array([[4.0, Timestamp('2021-08-01 00:00:00'), 1.0, 3, 'test', 'cat'],

[4.0, Timestamp('2021-08-01 00:00:00'), 1.0, 3, 'train', 'cat'],

[4.0, Timestamp('2021-08-01 00:00:00'), 1.0, 3, 'test', 'cat'],

[4.0, Timestamp('2021-08-01 00:00:00'), 1.0, 3, 'train', 'cat']],

dtype=object)

Note

DataFrame.to_numpy does not include the index or column labels in the output.
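
The dtype behavior described in the notes above can be checked on a pair of small frames. This is a minimal sketch; the frames `floats` and `mixed` are hypothetical and exist only to contrast the two cases.

```python
import numpy as np
import pandas as pd

# Hypothetical frames, chosen only to contrast the two cases.
floats = pd.DataFrame({"x": [1.0, 2.0], "y": [3.0, 4.0]})
mixed = pd.DataFrame({"x": [1.0, 2.0], "label": ["a", "b"]})

float_array = floats.to_numpy()  # every column is float64, so the array is float64
mixed_array = mixed.to_numpy()   # no common numeric dtype, so pandas falls back to object

print(float_array.dtype, mixed_array.dtype)
print(float_array.shape)  # only the values: index and column labels are not included
```

Checking `.dtype` on the result is a cheap way to notice that a conversion has fallen back to object before handing the array to numeric code.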

DataFrame.describe shows a quick statistical summary of your data:

In [22]: df.describe()

Out[22]:

A B C D

count 6.000000 6.000000 6.000000 6.000000

mean -0.126725 0.469477 0.098732 -0.144909

std 0.309144 0.486873 0.893740 0.780156

min -0.640489 0.023023 -1.006559 -1.131507

25% -0.224012 0.106308 -0.430602 -0.626129

50% -0.124392 0.286192 0.004124 -0.300009

75% 0.095669 0.836547 0.474393 0.491972

max 0.217120 1.155111 1.535067 0.840941

DataFrame.info shows information about the dataset, including the index, column dtypes, non-null counts, and memory usage:

In [23]: df.info()

<class 'pandas.core.frame.DataFrame'>

DatetimeIndex: 6 entries, 2021-08-01 to 2021-08-06

Freq: D

Data columns (total 4 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 A 6 non-null float64

1 B 6 non-null float64

2 C 6 non-null float64

3 D 6 non-null float64

dtypes: float64(4)

memory usage: 240.0 bytes

Locate and aggregate NaN values column-wise:

In [24]: df.isnull().sum().sort_values(ascending=False)

Out[24]:

A 0

B 0

C 0

D 0

dtype: int64

Find the total number of NaN values:

In [25]: df.isna().sum().sum() # identical to isnull() here

Out[25]: 0
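
Because df contains no missing values, both counts above are zero. The sketch below repeats the same two calls on a small hypothetical frame that does contain NaN, so the per-column and total counts are visible.

```python
import numpy as np
import pandas as pd

# Hypothetical frame with three missing values in total.
frame = pd.DataFrame({"a": [1.0, np.nan, 3.0], "b": [np.nan, np.nan, 6.0]})

per_column = frame.isna().sum()        # one count per column
total = int(frame.isna().sum().sum())  # a single count for the whole frame

print(per_column)
print(total)
```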

Transposing your data:

In [26]: df.T

Out[26]:

2021-08-01 2021-08-02 2021-08-03 2021-08-04 2021-08-05 2021-08-06

A 0.217120 0.151220 -0.239416 -0.640489 -0.177799 -0.070985

B 0.353121 1.155111 0.997688 0.219262 0.023023 0.068657

C -0.551206 0.077040 -1.006559 0.606844 1.535067 -0.068792

D 0.840941 -0.548415 0.673164 -0.652033 -0.051603 -1.131507

Sorting by an axis:

In [27]: df.sort_index(axis=1, ascending=False)

Out[27]:

D C B A

2021-08-01 0.840941 -0.551206 0.353121 0.217120

2021-08-02 -0.548415 0.077040 1.155111 0.151220

2021-08-03 0.673164 -1.006559 0.997688 -0.239416

2021-08-04 -0.652033 0.606844 0.219262 -0.640489

2021-08-05 -0.051603 1.535067 0.023023 -0.177799

2021-08-06 -1.131507 -0.068792 0.068657 -0.070985

Sorting by values:

In [28]: df.sort_values(by="B")

Out[28]:

A B C D

2021-08-05 -0.177799 0.023023 1.535067 -0.051603

2021-08-06 -0.070985 0.068657 -0.068792 -1.131507

2021-08-04 -0.640489 0.219262 0.606844 -0.652033

2021-08-01 0.217120 0.353121 -0.551206 0.840941

2021-08-03 -0.239416 0.997688 -1.006559 0.673164

2021-08-02 0.151220 1.155111 0.077040 -0.548415

Selection#

Note

While standard Python / NumPy expressions for selecting and setting are intuitive and come in handy for interactive work, for production code, we recommend the optimized pandas data access methods, .at, .iat, .loc and .iloc.

Getting#

Selecting a single column, which yields a Series, equivalent to df.A:

In [29]: df["A"]

Out[29]:

2021-08-01 0.217120

2021-08-02 0.151220

2021-08-03 -0.239416

2021-08-04 -0.640489

2021-08-05 -0.177799

2021-08-06 -0.070985

Freq: D, Name: A, dtype: float64

Selecting via [], which slices the rows.

In [30]: df[0:3]

Out[30]:

A B C D

2021-08-01 0.217120 0.353121 -0.551206 0.840941

2021-08-02 0.151220 1.155111 0.077040 -0.548415

2021-08-03 -0.239416 0.997688 -1.006559 0.673164

In [31]: df["20210802":"20210804"]

Out[31]:

A B C D

2021-08-02 0.151220 1.155111 0.077040 -0.548415

2021-08-03 -0.239416 0.997688 -1.006559 0.673164

2021-08-04 -0.640489 0.219262 0.606844 -0.652033

Selection by label#

For getting a cross section using a label:

In [32]: df.loc[dates[0]]

Out[32]:

A 0.217120

B 0.353121

C -0.551206

D 0.840941

Name: 2021-08-01 00:00:00, dtype: float64

Selecting on a multi-axis by label:

In [33]: df.loc[:, ["A", "B"]]

Out[33]:

A B

2021-08-01 0.217120 0.353121

2021-08-02 0.151220 1.155111

2021-08-03 -0.239416 0.997688

2021-08-04 -0.640489 0.219262

2021-08-05 -0.177799 0.023023

2021-08-06 -0.070985 0.068657

With label slicing, both endpoints are included:

In [34]: df.loc["20210802":"20210804", ["A", "B"]]

Out[34]:

A B

2021-08-02 0.151220 1.155111

2021-08-03 -0.239416 0.997688

2021-08-04 -0.640489 0.219262

Reduction in the dimensions of the returned object:

In [35]: df.loc["20210802", ["A", "B"]]

Out[35]:

A 0.151220

B 1.155111

Name: 2021-08-02 00:00:00, dtype: float64

For getting a scalar value:

In [36]: df.loc[dates[0], "A"]

Out[36]: 0.21712016704323

For getting fast access to a scalar (equivalent to the prior method):

In [37]: df.at[dates[0], "A"]

Out[37]: 0.21712016704323

Selection by position#

Select via the position of the passed integers:

In [38]: df.iloc[3]

Out[38]:

A -0.640489

B 0.219262

C 0.606844

D -0.652033

Name: 2021-08-04 00:00:00, dtype: float64

By integer slices, acting similarly to NumPy/Python:

In [39]: df.iloc[3:5, 0:2]

Out[39]:

A B

2021-08-04 -0.640489 0.219262

2021-08-05 -0.177799 0.023023

By lists of integer position locations, similar to the NumPy/Python style:

In [40]: df.iloc[[1, 2, 4], [0, 2]]

Out[40]:

A C

2021-08-02 0.151220 0.077040

2021-08-03 -0.239416 -1.006559

2021-08-05 -0.177799 1.535067

For slicing rows explicitly:

In [41]: df.iloc[1:3, :]

Out[41]:

A B C D

2021-08-02 0.151220 1.155111 0.077040 -0.548415

2021-08-03 -0.239416 0.997688 -1.006559 0.673164

For slicing columns explicitly:

In [42]: df.iloc[:, 1:3]

Out[42]:

B C

2021-08-01 0.353121 -0.551206

2021-08-02 1.155111 0.077040

2021-08-03 0.997688 -1.006559

2021-08-04 0.219262 0.606844

2021-08-05 0.023023 1.535067

2021-08-06 0.068657 -0.068792

For getting a value explicitly:

In [43]: df.iloc[1, 1]

Out[43]: 1.1551105853091492

For getting fast access to a scalar (equivalent to the prior method):

In [44]: df.iat[1, 1]

Out[44]: 1.1551105853091492
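
One difference between the two selection styles above is easy to overlook: label slices with .loc include both endpoints, while positional slices with .iloc exclude the stop position, as in plain Python. A minimal sketch on a hypothetical single-column frame:

```python
import pandas as pd

# Hypothetical frame indexed by six consecutive days.
idx = pd.date_range("20210801", periods=6)
frame = pd.DataFrame({"A": range(6)}, index=idx)

by_label = frame.loc["20210802":"20210804"]  # both endpoints included
by_position = frame.iloc[1:3]                # stop position excluded

print(len(by_label), len(by_position))

# .at and .iat address the same cell, by label and by position respectively.
same_cell = frame.at[idx[0], "A"] == frame.iat[0, 0]
print(same_cell)
```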

Selection by dtype#

In [45]: titanic = sns.load_dataset('titanic')

In [46]: titanic.head()

Out[46]:

survived pclass sex age sibsp parch fare embarked class who adult_male deck embark_town alive alone

0 0 3 male 22.0 1 0 7.2500 S Third man True NaN Southampton no False

1 1 1 female 38.0 1 0 71.2833 C First woman False C Cherbourg yes False

2 1 3 female 26.0 0 0 7.9250 S Third woman False NaN Southampton yes True

3 1 1 female 35.0 1 0 53.1000 S First woman False C Southampton yes False

4 0 3 male 35.0 0 0 8.0500 S Third man True NaN Southampton no True

In [47]: titanic.select_dtypes(include=['datetime', 'number']).head()

Out[47]:

survived pclass age sibsp parch fare

0 0 3 22.0 1 0 7.2500

1 1 1 38.0 1 0 71.2833

2 1 3 26.0 0 0 7.9250

3 1 1 35.0 1 0 53.1000

4 0 3 35.0 0 0 8.0500

In [48]: titanic.select_dtypes(exclude=['object', 'double']).head()

Out[48]:

survived pclass sibsp parch class adult_male deck alone

0 0 3 1 0 Third True NaN False

1 1 1 1 0 First False C False

2 1 3 0 0 Third False NaN True

3 1 1 1 0 First False C False

4 0 3 0 0 Third True NaN True
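
The titanic example above needs seaborn to fetch a dataset. The same include/exclude behavior can be sketched on a small hypothetical frame built by hand; the column names here are made up for illustration.

```python
import pandas as pd

# Hypothetical frame with one integer, one float, one string and one boolean column.
frame = pd.DataFrame(
    {"n": [1, 2], "x": [0.5, 1.5], "s": ["a", "b"], "flag": [True, False]}
)

numeric = frame.select_dtypes(include="number")       # integer and float columns
non_float = frame.select_dtypes(exclude=["float64"])  # everything except float64

print(list(numeric.columns))
print(list(non_float.columns))
```

Note that the boolean column is not selected by "number"; it would have to be requested explicitly with "bool".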

Boolean indexing#

Using a single column’s values to select data.

In [49]: df[df["A"] > 0]

Out[49]:

A B C D

2021-08-01 0.21712 0.353121 -0.551206 0.840941

2021-08-02 0.15122 1.155111 0.077040 -0.548415

Selecting values from a DataFrame where a boolean condition is met.

In [50]: df[df > 0]

Out[50]:

A B C D

2021-08-01 0.21712 0.353121 NaN 0.840941

2021-08-02 0.15122 1.155111 0.077040 NaN

2021-08-03 NaN 0.997688 NaN 0.673164

2021-08-04 NaN 0.219262 0.606844 NaN

2021-08-05 NaN 0.023023 1.535067 NaN

2021-08-06 NaN 0.068657 NaN NaN

Using the Series.isin method for filtering:

In [51]: df2[df2["E"].isin(["test"])]

Out[51]:

A B C D E F

0 4.0 2021-08-01 1.0 3 test cat

2 4.0 2021-08-01 1.0 3 test cat
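
Boolean masks can also be combined. The sketch below, on a hypothetical frame, uses & and | with parentheses around each condition, because these operators bind more tightly than comparisons in Python.

```python
import pandas as pd

# Hypothetical frame for combining conditions.
frame = pd.DataFrame({"A": [1, -2, 3, -4], "B": ["x", "y", "x", "z"]})

both = frame[(frame["A"] > 0) & (frame["B"].isin(["x"]))]  # rows meeting both conditions
either = frame[(frame["A"] > 0) | (frame["B"] == "z")]     # rows meeting at least one

print(both)
print(either)
```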

Setting#

Setting a new column automatically aligns the data by the indexes.

In [52]: s1 = pd.Series(np.arange(6)+1, index=pd.date_range("20210801", periods=6))

In [53]: s1

Out[53]:

2021-08-01 1

2021-08-02 2

2021-08-03 3

2021-08-04 4

2021-08-05 5

2021-08-06 6

Freq: D, dtype: int64

In [54]: df["F"] = s1

Setting values by label:

In [55]: df.at[dates[0], "A"] = 0

Setting values by position:

In [56]: df.iat[0, 1] = 0

Setting by assigning with a NumPy array:

In [57]: df["D"] = np.array([6] * len(df))

The result of the prior setting operations.

In [58]: df

Out[58]:

A B C D F

2021-08-01 0.000000 0.000000 -0.551206 6 1

2021-08-02 0.151220 1.155111 0.077040 6 2

2021-08-03 -0.239416 0.997688 -1.006559 6 3

2021-08-04 -0.640489 0.219262 0.606844 6 4

2021-08-05 -0.177799 0.023023 1.535067 6 5

2021-08-06 -0.070985 0.068657 -0.068792 6 6
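
The index alignment mentioned at the start of this section is easier to see when the Series and the DataFrame do not share the same labels in the same order. A minimal sketch with hypothetical labels:

```python
import pandas as pd

# Hypothetical frame and a Series whose index is shuffled and incomplete.
frame = pd.DataFrame({"A": [10, 20, 30]}, index=["a", "b", "c"])
values = pd.Series([1, 2], index=["c", "a"])

# Assignment matches on index labels, not on position;
# the label "b" has no match in values and becomes NaN.
frame["B"] = values

print(frame)
```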

A where operation with setting.

In [59]: df2 = df.copy()

In [60]: df2[df2 > 0] = -df2

In [61]: df2

Out[61]:

A B C D F

2021-08-01 0.000000 0.000000 -0.551206 -6 -1

2021-08-02 -0.151220 -1.155111 -0.077040 -6 -2

2021-08-03 -0.239416 -0.997688 -1.006559 -6 -3

2021-08-04 -0.640489 -0.219262 -0.606844 -6 -4

2021-08-05 -0.177799 -0.023023 -1.535067 -6 -5

2021-08-06 -0.070985 -0.068657 -0.068792 -6 -6

Missing data#

pandas primarily uses the value np.nan to represent missing data. It is by default not included in computations.

Reindexing allows you to change/add/delete the index on a specified axis. This returns a copy of the data.

In [62]: df1 = df.reindex(index=dates[0:4], columns=list(df.columns) + ["E"])

In [63]: df1

Out[63]:

A B C D F E

2021-08-01 0.000000 0.000000 -0.551206 6 1 NaN

2021-08-02 0.151220 1.155111 0.077040 6 2 NaN

2021-08-03 -0.239416 0.997688 -1.006559 6 3 NaN

2021-08-04 -0.640489 0.219262 0.606844 6 4 NaN

In [64]: df1.loc[dates[0] : dates[1], "E"] = 1

In [65]: df1

Out[65]:

A B C D F E

2021-08-01 0.000000 0.000000 -0.551206 6 1 1.0

2021-08-02 0.151220 1.155111 0.077040 6 2 1.0

2021-08-03 -0.239416 0.997688 -1.006559 6 3 NaN

2021-08-04 -0.640489 0.219262 0.606844 6 4 NaN

Drop any rows that have missing data.

In [66]: df1.dropna(how="any")

Out[66]:

A B C D F E

2021-08-01 0.00000 0.000000 -0.551206 6 1 1.0

2021-08-02 0.15122 1.155111 0.077040 6 2 1.0

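Drop any columns that have fewer non-missing values than a threshold. Note that thresh is computed here from len(df) (6 rows, giving 5.4) while df1 has only 4 rows, so no column can reach the threshold and the result is empty: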

In [67]: df1.dropna(thresh=len(df)*0.9, axis=1)

Out[67]:

Empty DataFrame

Columns: []

Index: [2021-08-01 00:00:00, 2021-08-02 00:00:00, 2021-08-03 00:00:00, 2021-08-04 00:00:00]
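
The thresh parameter is the minimum number of non-missing values a row or column needs in order to be kept. A small sketch on a hypothetical frame whose columns have four, two, and zero valid values:

```python
import numpy as np
import pandas as pd

# Hypothetical frame: column names describe how many values are present.
frame = pd.DataFrame(
    {
        "full": [1.0, 2.0, 3.0, 4.0],
        "half": [1.0, np.nan, 3.0, np.nan],
        "empty": [np.nan] * 4,
    }
)

at_least_two = frame.dropna(axis=1, thresh=2)    # keeps columns with >= 2 valid values
at_least_three = frame.dropna(axis=1, thresh=3)  # keeps columns with >= 3 valid values

print(list(at_least_two.columns))
print(list(at_least_three.columns))
```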

Get the boolean mask where values are nan.

In [68]: pd.isna(df1)

Out[68]:

A B C D F E

2021-08-01 False False False False False False

2021-08-02 False False False False False False

2021-08-03 False False False False False True

2021-08-04 False False False False False True

Replace NaN in a column with a value.

In [69]: df1["E"] = df1["E"].replace(np.nan, 6.0) # assign back instead of a chained inplace call

In [70]: df1

Out[70]:

A B C D F E

2021-08-01 0.000000 0.000000 -0.551206 6 1 1.0

2021-08-02 0.151220 1.155111 0.077040 6 2 1.0

2021-08-03 -0.239416 0.997688 -1.006559 6 3 6.0

2021-08-04 -0.640489 0.219262 0.606844 6 4 6.0

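Fill missing data by propagating the nearest valid value forward (ffill) or backward (bfill) along an axis. The trailing comments name the neighbor each fill takes its value from: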

In [71]: df1.fillna(axis=0, method='ffill', inplace=True) # up

In [72]: df1.fillna(axis=1, method='ffill') # left

Out[72]:

A B C D F E

2021-08-01 0.000000 0.000000 -0.551206 6.0 1.0 1.0

2021-08-02 0.151220 1.155111 0.077040 6.0 2.0 1.0

2021-08-03 -0.239416 0.997688 -1.006559 6.0 3.0 6.0

2021-08-04 -0.640489 0.219262 0.606844 6.0 4.0 6.0

In [73]: df1.fillna(axis=0, method='bfill') # down

Out[73]:

A B C D F E

2021-08-01 0.000000 0.000000 -0.551206 6 1 1.0

2021-08-02 0.151220 1.155111 0.077040 6 2 1.0

2021-08-03 -0.239416 0.997688 -1.006559 6 3 6.0

2021-08-04 -0.640489 0.219262 0.606844 6 4 6.0

In [74]: df1.fillna(axis=1, method='bfill') # right

Out[74]:

A B C D F E

2021-08-01 0.000000 0.000000 -0.551206 6.0 1.0 1.0

2021-08-02 0.151220 1.155111 0.077040 6.0 2.0 1.0

2021-08-03 -0.239416 0.997688 -1.006559 6.0 3.0 6.0

2021-08-04 -0.640489 0.219262 0.606844 6.0 4.0 6.0
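
Since df1 no longer contains NaN at this point, the fills above leave it unchanged. The sketch below shows the two directions on a hypothetical Series with a gap, using the dedicated ffill and bfill methods. Recent pandas releases deprecate the method= keyword of fillna in favor of these methods; the exact version is worth checking against the release notes of your installation.

```python
import numpy as np
import pandas as pd

# Hypothetical Series with a two-element gap in the middle.
s = pd.Series([1.0, np.nan, np.nan, 4.0])

forward = s.ffill()   # each gap takes the last valid value before it
backward = s.bfill()  # each gap takes the next valid value after it

print(forward.tolist())
print(backward.tolist())
```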

Drop duplicate values in a column.

In [75]: df1.E.drop_duplicates(keep="last")

Out[75]:

2021-08-02 1.0

2021-08-04 6.0

Name: E, dtype: float64

Drop rows that contain any of the given values (here 2 or 3).

In [76]: df1[((df1 != 2) & (df1 != 3)).all(axis=1)]

Out[76]:

A B C D F E

2021-08-01 0.000000 0.000000 -0.551206 6 1 1.0

2021-08-04 -0.640489 0.219262 0.606844 6 4 6.0

Change certain column names.

In [77]: df1.rename(columns={"F":"Rank", "E":"Variance"})

Out[77]:

A B C D Rank Variance

2021-08-01 0.000000 0.000000 -0.551206 6 1 1.0

2021-08-02 0.151220 1.155111 0.077040 6 2 1.0

2021-08-03 -0.239416 0.997688 -1.006559 6 3 6.0

2021-08-04 -0.640489 0.219262 0.606844 6 4 6.0

Operations#

Stats#

Operations in general exclude missing data.

Performing a descriptive statistic:

In [78]: df.mean()

Out[78]:

A -0.162912

B 0.410623

C 0.098732

D 6.000000

F 3.500000

dtype: float64

Same operation on the other axis:

In [79]: df.mean(1)

Out[79]:

2021-08-01 1.289759

2021-08-02 1.876674

2021-08-03 1.750343

2021-08-04 2.037123

2021-08-05 2.476058

2021-08-06 2.385776

Freq: D, dtype: float64

Operating with objects that have different dimensionality and need alignment. In addition, pandas automatically broadcasts along the specified dimension.

In [80]: s = pd.Series([1, 3, 5, np.nan, 6, 8], index=dates).shift(2)

In [81]: s

Out[81]:

2021-08-01 NaN

2021-08-02 NaN

2021-08-03 1.0

2021-08-04 3.0

2021-08-05 5.0

2021-08-06 NaN

Freq: D, dtype: float64

In [82]: df.sub(s, axis="index")

Out[82]:

A B C D F

2021-08-01 NaN NaN NaN NaN NaN

2021-08-02 NaN NaN NaN NaN NaN

2021-08-03 -1.239416 -0.002312 -2.006559 5.0 2.0

2021-08-04 -3.640489 -2.780738 -2.393156 3.0 1.0

2021-08-05 -5.177799 -4.976977 -3.464933 1.0 0.0

2021-08-06 NaN NaN NaN NaN NaN
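
The alignment and broadcasting in the subtraction above can be traced on smaller numbers. In this sketch (all names hypothetical) the Series is matched to the rows by index label and then subtracted from every column; a NaN in the Series produces a NaN row.

```python
import numpy as np
import pandas as pd

# Hypothetical frame and per-row offsets sharing the same index labels.
frame = pd.DataFrame(
    {"A": [1.0, 2.0, 3.0], "B": [10.0, 20.0, 30.0]}, index=["x", "y", "z"]
)
offsets = pd.Series([1.0, np.nan, 3.0], index=["x", "y", "z"])

# axis="index" aligns offsets with the rows and broadcasts across the columns.
result = frame.sub(offsets, axis="index")

print(result)
```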

Apply#

Applying functions to the data:

In [83]: df.apply(np.cumsum)

Out[83]:

A B C D F

2021-08-01 0.000000 0.000000 -0.551206 6 1

2021-08-02 0.151220 1.155111 -0.474166 12 3

2021-08-03 -0.088196 2.152799 -1.480724 18 6

2021-08-04 -0.728685 2.372060 -0.873880 24 10

2021-08-05 -0.906484 2.395084 0.661187 30 15

2021-08-06 -0.977470 2.463741 0.592395 36 21

In [84]: df.apply(lambda x: x.max() - x.min())

Out[84]:

A 0.791708

B 1.155111

C 2.541626

D 0.000000

F 5.000000

dtype: float64
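
By default apply passes each column to the function. Passing axis=1 passes each row instead. A minimal sketch with a hypothetical frame and the same max-minus-min function:

```python
import pandas as pd

# Hypothetical frame with small integers so the ranges are easy to check by hand.
frame = pd.DataFrame({"A": [1, 5, 3], "B": [10, 2, 8]})

col_range = frame.apply(lambda col: col.max() - col.min())          # one value per column
row_range = frame.apply(lambda row: row.max() - row.min(), axis=1)  # one value per row

print(col_range.tolist())
print(row_range.tolist())
```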

String Methods#

Series is equipped with a set of string processing methods in the str attribute that make it easy to operate on each element of the array, as in the code snippet below. Note that pattern-matching in str generally uses regular expressions by default (and in some cases always uses them).

In [85]: s = pd.Series(["A", "B", "C", np.nan, "CABA", "dog", "cat"])

In [86]: s.str.lower()

Out[86]:

0 a

1 b

2 c

3 NaN

4 caba

5 dog

6 cat

dtype: object

Other string methods include str.title, str.capitalize, and str.upper.
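
The remark about regular expressions matters for methods such as str.contains. The following sketch reuses the Series from above and adds a hypothetical second Series to show how regex=False switches to literal matching.

```python
import numpy as np
import pandas as pd

s = pd.Series(["A", "B", "C", np.nan, "CABA", "dog", "cat"])

# The pattern is a regular expression: "^c" means "starts with c".
# case=False ignores case, and na=False reports missing values as False.
starts_with_c = s.str.contains(r"^c", case=False, na=False)

# With regex=False the dot is matched literally instead of as "any character".
literal_dot = pd.Series(["a.b", "axb"]).str.contains(".", regex=False)

print(starts_with_c.tolist())
print(literal_dot.tolist())
```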

Data types#

Several methods to convert data types are available:

In [87]: df = pd.DataFrame({

....: 'product': ['A', 'B', 'C', 'D'],

....: 'price': ['10', '20', '30', '40'],

....: 'sales': ['20', '-', '60', '-']

....: })

....:

In [88]: df

Out[88]:

product price sales

0 A 10 20

1 B 20 -

2 C 30 60

3 D 40 -

In [89]: df = df.astype({'price': 'int'})

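If we use astype to convert sales to integer, a ValueError is raised because of the invalid literal '-'. Fortunately, to_numeric handles this case when called with errors='coerce', which turns values that cannot be parsed into NaN (and therefore yields a float column):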

In [90]: df['sales'] = pd.to_numeric(df['sales'], errors='coerce')

In [91]: df.dtypes

Out[91]:

product object

price int64

sales float64

dtype: object
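
The difference between the two conversions can be reproduced on the sales values alone. This is a sketch; the final step, casting to the nullable Int64 dtype to get integers back while keeping the missing entries, is an optional addition that is not part of the walkthrough above.

```python
import pandas as pd

sales = pd.Series(["20", "-", "60", "-"])

# astype refuses to convert because "-" is not a valid integer literal.
try:
    sales.astype("int")
    raised = False
except ValueError:
    raised = True

# to_numeric with errors="coerce" turns unparseable entries into NaN.
coerced = pd.to_numeric(sales, errors="coerce")

# Optional: the nullable integer dtype keeps integers and marks gaps as <NA>.
nullable = coerced.astype("Int64")

print(raised)
print(coerced.tolist())
print(nullable.dtype)
```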

Merge#

Join#

We illustrate joins with a more detailed example in a business setting. Suppose we have three dataframes:

In [92]: df1 = pd.DataFrame({

....: "id": np.arange(1, 4),

....: "price": [99, 105, 50]

....: })

....:

In [93]: df1

Out[93]:

id price

0 1 99

1 2 105

2 3 50

In [94]: df2 = pd.DataFrame({

....: "id": np.arange(3, 5),

....: "count": [12, 15]

....: })

....:

In [95]: df2

Out[95]:

id count

0 3 12

1 4 15

In [96]: df3 = pd.DataFrame({

....: "id": np.arange(1, 7),

....: "price": [99, 105, 50, 60, 30, 40],

....: "count": [10, 20, 12, 15, 100, 50],

....: "date": pd.date_range("20210801", periods=6)

....: })

....:

In [97]: df3

Out[97]:

id price count date

0 1 99 10 2021-08-01

1 2 105 20 2021-08-02

2 3 50 12 2021-08-03

3 4 60 15 2021-08-04

4 5 30 100 2021-08-05

5 6 40 50 2021-08-06

SQL-style merges:

In [98]: pd.merge(df1, df2, on="id", how="left")

Out[98]:

id price count

0 1 99 NaN

1 2 105 NaN

2 3 50 12.0

In [99]: pd.merge(df1, df2, on="id", how="right")

Out[99]:

id price count

0 3 50.0 12

1 4 NaN 15

In [100]: pd.merge(df1, df2, on="id", how="outer")

Out[100]:

id price count

0 1 99.0 NaN

1 2 105.0 NaN

2 3 50.0 12.0

3 4 NaN 15.0

In [101]: pd.merge(df1, df2, on="id", how="inner")

Out[101]:

id price count

0 3 50 12

In [102]: df1.merge(df2, on="id", how="inner") # alternative syntax

Out[102]:

id price count

0 3 50 12
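
When comparing the four join types it can help to see where each row came from. The sketch below rebuilds the two small frames under the hypothetical names `left` and `right` and uses the indicator parameter of merge, which adds a `_merge` column.

```python
import numpy as np
import pandas as pd

# Same contents as df1 and df2 above, under hypothetical names.
left = pd.DataFrame({"id": np.arange(1, 4), "price": [99, 105, 50]})
right = pd.DataFrame({"id": np.arange(3, 5), "count": [12, 15]})

# indicator=True records whether each row was found in left, right, or both.
merged = pd.merge(left, right, on="id", how="outer", indicator=True)
origins = merged["_merge"].astype(str).tolist()

print(merged)
```

Filtering on `_merge == "left_only"` is a common way to find rows of the left frame that have no match in the right one.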

If two dataframes share a column name, calling DataFrame.join directly raises a ValueError stating that columns overlap but no suffix is specified. One approach is to specify a suffix for each dataframe, which is applied to the conflicting columns:

In [103]: df1.join(df2, lsuffix="_df1", rsuffix="_df2")

Out[103]:

id_df1 price id_df2 count

0 1 99 3.0 12.0

1 2 105 4.0 15.0

2 3 50 NaN NaN

Another approach is to set the shared column as the index on both dataframes beforehand:

In [104]: df1.set_index("id").join(df2.set_index("id"))

Out[104]:

price count

id

1 99 NaN

2 105 NaN

3 50 12.0

Reset the index, either discarding the old index (drop=True) or restoring it as a regular column (drop=False):

In [105]: df1.set_index("id", inplace=True)

In [106]: df1

Out[106]:

price

id

1 99

2 105

3 50

In [107]: df1.reset_index(drop=True)

Out[107]:

price

0 99

1 105

2 50

In [108]: df1.reset_index(drop=False, inplace=True)

Concat#

pandas provides various facilities for easily combining together Series and DataFrame objects with various kinds of set logic for the indexes and relational algebra functionality in the case of join / merge-type operations.

Split into pieces and concatenate back:

In [109]: pieces = [df3[:2], df3[2:4], df3[4:]]

In [110]: pieces

Out[110]:

[ id price count date

0 1 99 10 2021-08-01

1 2 105 20 2021-08-02,

id price count date

2 3 50 12 2021-08-03

3 4 60 15 2021-08-04,

id price count date

4 5 30 100 2021-08-05

5 6 40 50 2021-08-06]

In [111]: pd.concat(pieces)

Out[111]:

id price count date

0 1 99 10 2021-08-01

1 2 105 20 2021-08-02

2 3 50 12 2021-08-03

3 4 60 15 2021-08-04

4 5 30 100 2021-08-05

5 6 40 50 2021-08-06

Note

Adding a column to a DataFrame is relatively fast. However, adding a row requires a copy, and may be expensive. We recommend passing a pre-built list of records to the DataFrame constructor instead of building a DataFrame by iteratively appending records to it.
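
The recommendation in the note above can be sketched as follows. The record fields `id` and `square` are hypothetical; the point is that rows are collected in a plain Python list and the DataFrame is built once at the end.

```python
import pandas as pd

# Collect rows as plain dicts; appending to a list is cheap.
records = []
for i in range(3):
    records.append({"id": i, "square": i * i})

# Build the DataFrame once, from the complete list of records.
frame = pd.DataFrame(records)

print(frame)
```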

We now extend this example to consolidate what has been covered so far. Determine whether each product has enough stock or needs restocking:

In [112]: df3["enough"] = np.where(df3["count"] >= 50, "yes", "no")

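Label each price as high or low (this snippet is not part of the numbered session, so the level column does not appear in the output further down):

df3.loc[df3["price"] > 50, "level"] = "high"

df3.loc[df3["price"] <= 50, "level"] = "low"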

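Use cut to categorize prices into bins (the resulting column is named ageGroup here even though it bins price). Values that fall outside every bin, such as the price 105, become NaN: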

In [113]: df3['ageGroup'] = pd.cut(df3['price'], bins=[0, 40, 70, 100],

.....: labels=['low', 'mid', 'high'])

.....:
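
The binning rule can be checked on the price values alone. In this sketch the second call, which replaces the top edge with np.inf so that no price falls outside the bins, is an illustrative variation and not part of the example above.

```python
import numpy as np
import pandas as pd

prices = pd.Series([99, 105, 50, 60, 30, 40])
labels = ["low", "mid", "high"]

# Bins are right-inclusive by default: (0, 40], (40, 70], (70, 100].
groups = pd.cut(prices, bins=[0, 40, 70, 100], labels=labels)

# Variation: an open-ended top bin also captures prices above 100.
open_ended = pd.cut(prices, bins=[0, 40, 70, np.inf], labels=labels)

print(groups.tolist())
print(open_ended.tolist())
```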

Split the date into year, month, and day, and append the parts horizontally to the dataframe:

In [114]: df3.date = df3.date.astype(str) # avoid AttributeError

In [115]: subdate = pd.DataFrame((x.split('-') for x in df3.date), index=df3.index,

.....: columns=['year','month','day'])

.....:

In [116]: pd.concat([df3,subdate], axis=1, keys=["df3", "dates"])

Out[116]:

df3 dates

id price count date enough ageGroup year month day

0 1 99 10 2021-08-01 no high 2021 08 01

1 2 105 20 2021-08-02 no NaN 2021 08 02

2 3 50 12 2021-08-03 no mid 2021 08 03

3 4 60 15 2021-08-04 no mid 2021 08 04

4 5 30 100 2021-08-05 yes low 2021 08 05

5 6 40 50 2021-08-06 yes low 2021 08 06

Warning

Convert data to a string dtype before applying string manipulation, or an AttributeError will occur. If df3.date is not converted first, the split call fails with: AttributeError: 'Timestamp' object has no attribute 'split'.
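
An alternative that avoids the string conversion altogether is the .dt accessor, which exposes the date components of a datetime column directly. This is a sketch of that alternative on a hypothetical Series, not the approach used above; note that it yields integers rather than zero-padded strings.

```python
import pandas as pd

# Hypothetical datetime column, three consecutive days.
dates = pd.Series(pd.date_range("20210801", periods=3))

# The .dt accessor reads the components without converting to strings.
parts = pd.DataFrame(
    {"year": dates.dt.year, "month": dates.dt.month, "day": dates.dt.day}
)

print(parts)
```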

Grouping#

By “group by” we are referring to a process involving one or more of the following steps:

Splitting the data into groups based on some criteria

Applying a function to each group independently

Combining the results into a data structure

In [117]: df = pd.DataFrame({

.....: "A": ["foo", "bar", "foo", "bar", "foo", "bar", "foo", "foo"],

.....: "B": ["one", "one", "two", "three", "two", "two", "one", "three"],

.....: "C": np.random.randn(8),

.....: "D": np.random.randn(8),

.....: })

.....:

In [118]: df

Out[118]:

A B C D

0 foo one 0.066567 1.593288

1 bar one -0.858266 -0.002256

2 foo two 1.090977 0.556972

3 bar three -0.914114 -0.365743

4 foo two -0.518657 1.653892

5 bar two -1.252172 0.866307

6 foo one -0.179143 0.288360

7 foo three -0.780076 0.609170

Grouping and then applying the pandas.core.groupby.GroupBy.sum function to the resulting groups.

In [119]: df.groupby("A").sum(numeric_only=True)

Out[119]:

C D

A

bar -3.024551 0.498308

foo -0.320333 4.701682

Grouping by multiple columns forms a hierarchical index, and again we can apply the sum function.

In [120]: df.groupby(["A", "B"]).sum(numeric_only=True)

Out[120]:

C D

A B

bar one -0.858266 -0.002256

three -0.914114 -0.365743

two -1.252172 0.866307

foo one -0.112576 1.881648

three -0.780076 0.609170

two 0.572319 2.210864
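
The three steps listed at the start of this section can be followed on fixed numbers, where the random data above makes the sums hard to verify by eye. A minimal sketch with a hypothetical frame and two aggregations at once:

```python
import pandas as pd

# Hypothetical frame with two groups of two rows each.
frame = pd.DataFrame(
    {"A": ["foo", "bar", "foo", "bar"], "C": [1.0, 2.0, 3.0, 4.0]}
)

# Split by A, apply sum and mean to column C of each group, combine into one table.
summary = frame.groupby("A")["C"].agg(["sum", "mean"])

print(summary)
```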

Reshaping#

Stack#

In [121]: tuples = list(

.....: zip(

.....: *[

.....: ["bar", "bar", "baz", "baz", "foo", "foo", "qux", "qux"],

.....: ["one", "two", "one", "two", "one", "two", "one", "two"],

.....: ]

.....: )

.....: )

.....:

In [122]: tuples

Out[122]:

[('bar', 'one'),

('bar', 'two'),

('baz', 'one'),

('baz', 'two'),

('foo', 'one'),

('foo', 'two'),

('qux', 'one'),

('qux', 'two')]

In [123]: index = pd.MultiIndex.from_tuples(tuples, names=["first", "second"])

In [124]: index

Out[124]:

MultiIndex([('bar', 'one'),

('bar', 'two'),

('baz', 'one'),

('baz', 'two'),

('foo', 'one'),

('foo', 'two'),

('qux', 'one'),

('qux', 'two')],

names=['first', 'second'])

In [125]: df = pd.DataFrame(np.random.randn(8, 2), index=index, columns=["A", "B"])

In [126]: df

Out[126]:

A B

first second

bar one 0.942738 0.473426

two -0.481780 0.217468

baz one -0.935083 -2.314436

two -0.756820 0.134721

foo one 1.626621 -0.189159

two 0.163252 -2.167390

qux one 0.872637 -0.399940

two 1.590034 -1.410947

In [127]: df2 = df[:4]

In [128]: df2

Out[128]:

A B

first second

bar one 0.942738 0.473426

two -0.481780 0.217468

baz one -0.935083 -2.314436

two -0.756820 0.134721

The DataFrame.stack method compresses a level in the DataFrame’s columns.

In [129]: stacked = df2.stack()

In [130]: stacked

Out[130]:

first second

bar one A 0.942738

B 0.473426

two A -0.481780

B 0.217468

baz one A -0.935083

B -2.314436

two A -0.756820

B 0.134721

dtype: float64

With a stacked DataFrame or Series (having a MultiIndex as the index), the inverse operation of DataFrame.stack is DataFrame.unstack, which by default unstacks the last level:

In [131]: stacked.unstack() # default: unstack the last level (level 2)

Out[131]:

A B

first second

bar one 0.942738 0.473426

two -0.481780 0.217468

baz one -0.935083 -2.314436

two -0.756820 0.134721

In [132]: stacked.unstack(1)

Out[132]:

second one two

first

bar A 0.942738 -0.481780

B 0.473426 0.217468

baz A -0.935083 -0.756820

B -2.314436 0.134721

In [133]: stacked.unstack(0)

Out[133]:

first bar baz

second

one A 0.942738 -0.935083

B 0.473426 -2.314436

two A -0.481780 -0.756820

B 0.217468 0.134721
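
That stack and unstack are inverse operations can be verified with a round trip. The sketch below uses a hypothetical frame with fixed values and the same index level names as above.

```python
import pandas as pd

# Hypothetical frame with a two-level row index.
index = pd.MultiIndex.from_product(
    [["bar", "baz"], ["one", "two"]], names=["first", "second"]
)
frame = pd.DataFrame(
    {"A": [1.0, 2.0, 3.0, 4.0], "B": [5.0, 6.0, 7.0, 8.0]}, index=index
)

stacked = frame.stack()       # column labels become a third index level
restored = stacked.unstack()  # the last level moves back to the columns

print(stacked.index.nlevels)
print(restored)
```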

Pivot tables#

In [134]: df = pd.DataFrame(

.....: {

.....: "A": ["one", "one", "two", "three"] * 3,

.....: "B": ["A", "B", "C"] * 4,

.....: "C": ["foo", "foo", "foo", "bar", "bar", "bar"] * 2,

.....: "D": np.random.randn(12),

.....: "E": np.random.randn(12),

.....: }

.....: )

.....:

In [135]: df

Out[135]:

A B C D E

0 one A foo -0.322460 -1.200878

1 one B foo 2.964687 0.539208

2 two C foo 0.039817 -0.656564

3 three A bar -1.170292 -0.248316

4 one B bar 0.268392 -0.265521

.. ... .. ... ... ...

7 three B foo -1.341226 0.041183

8 one C foo 0.073688 -1.352641

9 one A bar 0.102749 -0.313030

10 two B bar -0.321690 0.616352

11 three C bar -0.551272 -0.504566

[12 rows x 5 columns]

We can produce pivot tables from this data very easily:

In [136]: pd.pivot_table(df, values="D", index=["A", "B"], columns=["C"])

Out[136]:

C bar foo

A B

one A 0.102749 -0.322460

B 0.268392 2.964687

C 0.273466 0.073688

three A -1.170292 NaN

B NaN -1.341226

C -0.551272 NaN

two A NaN -0.074330

B -0.321690 NaN

C NaN 0.039817
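
Two details of the table above are worth spelling out: cells with several matching rows are averaged (the default aggregation is the mean), and combinations with no rows become NaN. A sketch on a hypothetical frame with fixed values:

```python
import pandas as pd

# Hypothetical frame: ("one", "foo") occurs twice, ("two", "foo") never.
frame = pd.DataFrame(
    {
        "A": ["one", "one", "one", "two"],
        "C": ["foo", "foo", "bar", "bar"],
        "D": [1.0, 3.0, 5.0, 7.0],
    }
)

table = pd.pivot_table(frame, values="D", index="A", columns="C")

print(table)
```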

Time series#

pandas has simple, powerful, and efficient functionality for performing resampling operations during frequency conversion (e.g., converting secondly data into 5-minutely data). This is extremely common in, but not limited to, financial applications.

In [137]: rng = pd.date_range("1/1/2012", periods=100, freq="S")

In [138]: ts = pd.Series(np.random.randint(0, 500, len(rng)), index=rng)

In [139]: ts

Out[139]:

2012-01-01 00:00:00 182

2012-01-01 00:00:01 250

2012-01-01 00:00:02 357

2012-01-01 00:00:03 212

2012-01-01 00:00:04 192

...

2012-01-01 00:01:35 466

2012-01-01 00:01:36 462

2012-01-01 00:01:37 206

2012-01-01 00:01:38 465

2012-01-01 00:01:39 491

Freq: S, Length: 100, dtype: int64

In [140]: ts.resample("5Min").sum()

Out[140]:

2012-01-01 28000

Freq: 5T, dtype: int64
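
With random data and a single output bucket the effect of resampling is hard to see. The sketch below uses a hypothetical per-minute series with known values and resamples it into 3-minute buckets.

```python
import pandas as pd

# Hypothetical series: one value per minute for six minutes.
rng = pd.date_range("2012-01-01", periods=6, freq="min")
ts = pd.Series([1, 2, 3, 4, 5, 6], index=rng)

# Each bucket covers three minutes and holds the sum of its values.
totals = ts.resample("3min").sum()

print(totals)
```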

Time zone representation:

In [141]: rng = pd.date_range("3/6/2012 00:00", periods=5, freq="D")

In [142]: ts = pd.Series(np.random.randn(len(rng)), rng)

In [143]: ts

Out[143]:

2012-03-06 0.856107

2012-03-07 -0.822492

2012-03-08 -0.274845

2012-03-09 -1.456409

2012-03-10 0.765602

Freq: D, dtype: float64

In [144]: ts_utc = ts.tz_localize("UTC")

In [145]: ts_utc

Out[145]:

2012-03-06 00:00:00+00:00 0.856107

2012-03-07 00:00:00+00:00 -0.822492

2012-03-08 00:00:00+00:00 -0.274845

2012-03-09 00:00:00+00:00 -1.456409

2012-03-10 00:00:00+00:00 0.765602

Freq: D, dtype: float64

Converting to another time zone:

In [146]: ts_utc.tz_convert("US/Eastern")

Out[146]:

2012-03-05 19:00:00-05:00 0.856107

2012-03-06 19:00:00-05:00 -0.822492

2012-03-07 19:00:00-05:00 -0.274845

2012-03-08 19:00:00-05:00 -1.456409

2012-03-09 19:00:00-05:00 0.765602

Freq: D, dtype: float64

Converting between time span representations:

In [147]: rng = pd.date_range("1/1/2012", periods=5, freq="M")

In [148]: ts = pd.Series(np.random.randn(len(rng)), index=rng)

In [149]: ts

Out[149]:

2012-01-31 -0.454218

2012-02-29 0.945687

2012-03-31 -1.095913

2012-04-30 -0.868136

2012-05-31 -1.558698

Freq: M, dtype: float64

In [150]: ps = ts.to_period()

In [151]: ps

Out[151]:

2012-01 -0.454218

2012-02 0.945687

2012-03 -1.095913

2012-04 -0.868136

2012-05 -1.558698

Freq: M, dtype: float64

In [152]: ps.to_timestamp()

Out[152]:

2012-01-01 -0.454218

2012-02-01 0.945687

2012-03-01 -1.095913

2012-04-01 -0.868136

2012-05-01 -1.558698

Freq: MS, dtype: float64
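
As the output above shows, to_timestamp places each period at its start by default. The sketch below, on a hypothetical two-month series, contrasts this with how="end", which places each period at its last instant.

```python
import pandas as pd

# Hypothetical series stamped at two month ends.
ts = pd.Series([1.0, 2.0], index=pd.to_datetime(["2012-01-31", "2012-02-29"]))

ps = ts.to_period("M")                # monthly periods: 2012-01, 2012-02
starts = ps.to_timestamp()            # default: start of each period
ends = ps.to_timestamp(how="end")     # last instant of each period

print(starts.index)
print(ends.index)
```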

Converting between period and timestamp enables some convenient arithmetic functions to be used. In the following example, we convert a quarterly frequency with year ending in November to 9am of the end of the month following the quarter end:

In [153]: prng = pd.period_range("1990Q1", "2000Q4", freq="Q-NOV")

In [154]: ts = pd.Series(np.random.randn(len(prng)), prng)

In [155]: ts.index = (prng.asfreq("M", "e") + 1).asfreq("H", "s") + 9

In [156]: ts.head()

Out[156]:

1990-03-01 09:00 0.498611

1990-06-01 09:00 2.248593

1990-09-01 09:00 -0.207991

1990-12-01 09:00 1.639503

1991-03-01 09:00 -0.182373

Freq: H, dtype: float64
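
The chained expression above can be easier to follow one step at a time. The sketch below works on a single Period rather than a whole index. Its last step is a swapped-in alternative: instead of converting to an hourly period and adding 9, it converts to a timestamp and adds a nine-hour Timedelta. The variable names are illustrative.

```python
import pandas as pd

# First quarter of a fiscal year that ends in November (Dec 1989 to Feb 1990).
quarter = pd.Period("1990Q1", freq="Q-NOV")

# Last month of that quarter.
last_month = quarter.asfreq("M", how="end")

# Adding an integer to a Period moves it by that many units of its frequency.
next_month = last_month + 1

# Swapped-in final step: take the start of that month and add nine hours.
stamp = next_month.to_timestamp(how="start") + pd.Timedelta(hours=9)

print(last_month)  # 1990-02
print(next_month)  # 1990-03
print(stamp)       # 1990-03-01 09:00:00
```

The result matches the first index entry shown above: 9am on the first day of the month after the quarter ends.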

Categoricals#

pandas can include categorical data in a DataFrame.

In [157]: df = pd.DataFrame(

.....: {

.....: "id": [1, 2, 3, 4, 5, 6],

.....: "raw_grade": ["a", "b", "b", "a", "a", "e"]

.....: }

.....: )

.....:

Convert the raw grades to a categorical data type.

In [158]: df["grade"] = df["raw_grade"].astype("category")

In [159]: df["grade"]

Out[159]:

0 a

1 b

2 b

3 a

4 a

5 e

Name: grade, dtype: category

Categories (3, object): ['a', 'b', 'e']

Rename the categories to more meaningful names (Series.cat.rename_categories returns a new Series, so assign the result back to the column).

In [160]: df["grade"] = df["grade"].cat.rename_categories(["very good", "good", "very bad"])

Reorder the categories and simultaneously add the missing categories (methods under Series.cat return a new Series by default).

In [161]: df["grade"] = df["grade"].cat.set_categories(

.....: ["very bad", "bad", "medium", "good", "very good"]

.....: )

.....:

In [162]: df["grade"]

Out[162]:

0 very good

1 good

2 good

3 very good

4 very good

5 very bad

Name: grade, dtype: category

Categories (5, object): ['very bad', 'bad', 'medium', 'good', 'very good']

Sorting follows the order of the categories, not lexical order.

In [163]: df.sort_values(by="grade")

Out[163]:

id raw_grade grade

5 6 e very bad

1 2 b good

2 3 b good

0 1 a very good

3 4 a very good

4 5 a very good

Grouping by a categorical column with observed=False also shows empty categories.

In [164]: df.groupby("grade", observed=False).size()

Out[164]:

grade

very bad 1

bad 0

medium 0

good 2

very good 3

dtype: int64
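
As a self-contained sketch of the categorical workflow, the snippet below renames with an explicit mapping instead of a positional list, which avoids depending on the order in which pandas inferred the categories. The mapping is an illustrative choice, not something the original session uses.

```python
import pandas as pd

df = pd.DataFrame({"raw_grade": ["a", "b", "b", "a", "a", "e"]})
df["grade"] = df["raw_grade"].astype("category")

# rename_categories returns a new Series, so the result is assigned back.
df["grade"] = df["grade"].cat.rename_categories(
    {"a": "very good", "b": "good", "e": "very bad"}
)

# set_categories fixes the category order and adds the unused labels.
df["grade"] = df["grade"].cat.set_categories(
    ["very bad", "bad", "medium", "good", "very good"]
)

# Sorting follows category order; observed=False keeps empty categories.
ordered = df.sort_values(by="grade")["grade"].tolist()
counts = df.groupby("grade", observed=False).size()

print(ordered)
print(counts.to_dict())
```

The sorted list begins with "very bad" and ends with "very good", and the counts include zeros for "bad" and "medium" even though no row carries those labels.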

Plotting#

We use the standard convention for referencing the matplotlib API:

In [165]: import matplotlib.pyplot as plt

In [166]: plt.close("all")

Calling plt.close("all") closes all open figure windows.

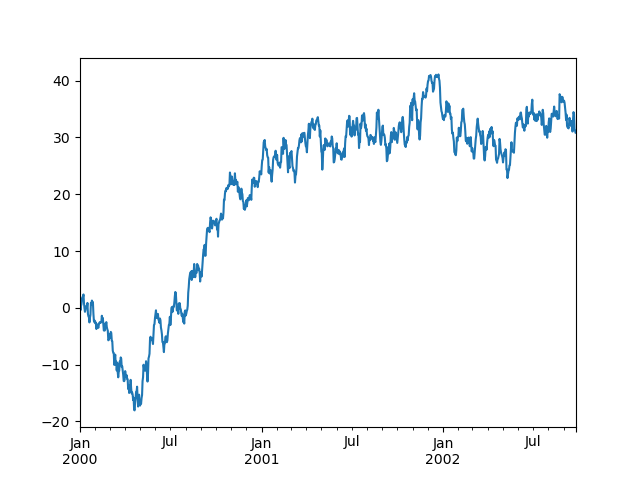

In [167]: ts = pd.Series(np.random.randn(1000), index=pd.date_range("1/1/2000", periods=1000))

In [168]: ts = ts.cumsum()

In [169]: ts.plot();

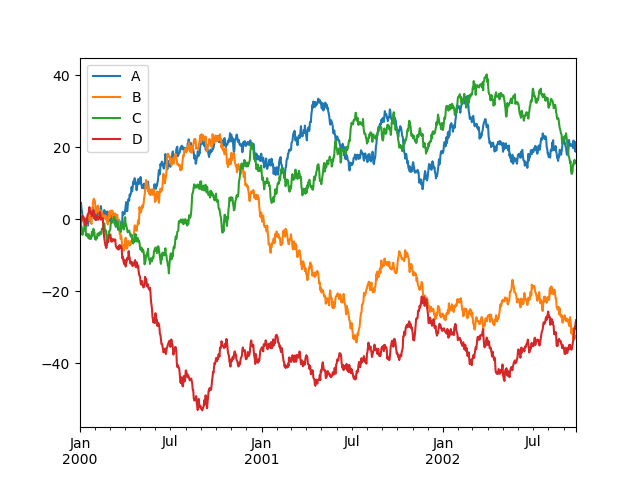

On a DataFrame, the DataFrame.plot method is a convenience to plot all of the columns with labels:

In [170]: df = pd.DataFrame(

.....: np.random.randn(1000, 4), index=ts.index, columns=["A", "B", "C", "D"]

.....: )

.....:

In [171]: df = df.cumsum()

In [172]: plt.figure();

In [173]: df.plot();

In [174]: plt.legend(loc='best');
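
Outside an interactive session, the same plot can be written to an image instead of shown in a window. The sketch below assumes a script or headless environment, so it selects matplotlib's non-interactive "Agg" backend and saves into an in-memory buffer. The seed, sizes, and title are arbitrary illustration values.

```python
import io

import matplotlib

matplotlib.use("Agg")  # non-interactive backend; an assumption for scripts
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
df = pd.DataFrame(
    rng.standard_normal((100, 4)),
    index=pd.date_range("2000-01-01", periods=100),
    columns=["A", "B", "C", "D"],
).cumsum()

# DataFrame.plot returns the matplotlib Axes it drew on.
ax = df.plot(title="Cumulative sums")

# Save the figure as PNG bytes; a file path would work here as well.
buffer = io.BytesIO()
ax.figure.savefig(buffer, format="png")

n_lines = len(ax.get_lines())
print(n_lines)  # 4
```

Keeping a reference to the returned Axes makes later adjustments such as labels, limits, or saving possible without relying on matplotlib's implicit "current figure".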

IO Management#

CSV#

Writing to a CSV file.

In [175]: df.to_csv("foo.csv")

Reading from a CSV file.

In [176]: pd.read_csv("foo.csv")

Out[176]:

Unnamed: 0 A B C D

0 2000-01-01 4.293040 -0.263270 -0.449204 -0.107300

1 2000-01-02 3.683266 0.697799 -0.522885 -0.617935

2 2000-01-03 3.394994 0.889145 -0.891287 -1.544124

3 2000-01-04 2.585954 -0.395924 0.029842 -3.537277

4 2000-01-05 2.372088 -0.382483 -0.185600 -2.740550

.. ... ... ... ... ...

995 2002-09-22 21.785727 -37.691960 17.134546 -28.607807

996 2002-09-23 20.752620 -37.406264 16.697785 -29.420493

997 2002-09-24 21.213086 -38.618105 16.832882 -31.164423

998 2002-09-25 18.640338 -38.324714 16.479689 -33.188935

999 2002-09-26 17.734826 -38.764208 17.033938 -31.751839

[1000 rows x 5 columns]
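
The "Unnamed: 0" column in the output above is the original datetime index, which to_csv wrote as an unlabeled first column. A minimal sketch of restoring it on read follows. It uses an in-memory buffer and a small made-up frame instead of foo.csv so that it runs without touching the file system.

```python
import io

import numpy as np
import pandas as pd

df = pd.DataFrame(
    np.arange(8, dtype="float64").reshape(4, 2),
    index=pd.date_range("2000-01-01", periods=4),
    columns=["A", "B"],
)

# Write the frame as CSV text, then rewind the buffer for reading.
buffer = io.StringIO()
df.to_csv(buffer)
buffer.seek(0)

# index_col=0 turns the first column back into the index;
# parse_dates=True asks pandas to parse that index as dates.
restored = pd.read_csv(buffer, index_col=0, parse_dates=True)

print(type(restored.index).__name__)  # DatetimeIndex
print(list(restored.columns))         # ['A', 'B']
```

Alternatively, passing index=False to to_csv drops the index on write when it carries no information.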

Excel#

Reading and writing to MS Excel.

Writing to an Excel file.

In [177]: df.to_excel("foo.xlsx", sheet_name="Sheet1")

Reading from an Excel file.

In [178]: pd.read_excel("foo.xlsx", "Sheet1", index_col=None, na_values=["NA"])

Out[178]:

Unnamed: 0 A B C D

0 2000-01-01 4.293040 -0.263270 -0.449204 -0.107300

1 2000-01-02 3.683266 0.697799 -0.522885 -0.617935

2 2000-01-03 3.394994 0.889145 -0.891287 -1.544124

3 2000-01-04 2.585954 -0.395924 0.029842 -3.537277

4 2000-01-05 2.372088 -0.382483 -0.185600 -2.740550

.. ... ... ... ... ...

995 2002-09-22 21.785727 -37.691960 17.134546 -28.607807

996 2002-09-23 20.752620 -37.406264 16.697785 -29.420493

997 2002-09-24 21.213086 -38.618105 16.832882 -31.164423

998 2002-09-25 18.640338 -38.324714 16.479689 -33.188935

999 2002-09-26 17.734826 -38.764208 17.033938 -31.751839

[1000 rows x 5 columns]

Composition#

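To compose one DataFrame from multiple files, use the glob library to collect the file paths. glob returns paths in arbitrary order, so sort them first. Then concatenate the frames either vertically (stacking rows, with ignore_index=True to renumber them) or horizontally (axis=1, placing the frames side by side).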

from glob import glob

files = sorted(glob("data/prefix_*.csv"))

pd.concat((pd.read_csv(file) for file in files), ignore_index=True)

pd.concat((pd.read_csv(file) for file in files), axis=1)
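
To make the two concatenation directions concrete, here is a self-contained sketch that first writes three small CSV files into a temporary directory and then combines them. The file contents and the column names "id" and "value" are hypothetical and exist only for this illustration.

```python
import tempfile
from glob import glob
from pathlib import Path

import pandas as pd

with tempfile.TemporaryDirectory() as tmp:
    # Create hypothetical input files prefix_0.csv .. prefix_2.csv.
    for i in range(3):
        part = pd.DataFrame({"id": [i * 2, i * 2 + 1], "value": [i, i]})
        part.to_csv(Path(tmp) / f"prefix_{i}.csv", index=False)

    # Sort the paths because glob gives no ordering guarantee.
    files = sorted(glob(str(Path(tmp) / "prefix_*.csv")))
    frames = [pd.read_csv(f) for f in files]

# Vertical: rows are stacked and the index is renumbered 0..n-1.
stacked = pd.concat(frames, ignore_index=True)

# Horizontal: frames are aligned on their index and placed side by side.
side_by_side = pd.concat(frames, axis=1)

print(stacked.shape)       # (6, 2)
print(side_by_side.shape)  # (2, 6)
```

Note that the horizontal result repeats the column names "id" and "value", so renaming columns or passing keys to pd.concat is usually worthwhile in that case.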

Miscellaneous#

Trim leading and trailing whitespace from the values in object-dtype columns.

for i in df:  # traverse each column
    if pd.api.types.is_object_dtype(df[i]):  # only columns with object dtype
        df[i] = df[i].str.strip()  # remove leading and trailing whitespace
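
A small worked example shows the effect on a frame that mixes text and numbers. This sketch swaps in pd.api.types.is_string_dtype as the column check, on the assumption that the text columns hold only strings; the sample data is made up.

```python
import pandas as pd

df = pd.DataFrame({"name": ["  Ann ", "Bob  "], "age": [31, 45]})

for col in df.columns:
    # Swapped-in check: true for columns whose values are all strings.
    if pd.api.types.is_string_dtype(df[col]):
        df[col] = df[col].str.strip()

print(df["name"].tolist())  # ['Ann', 'Bob']
print(df["age"].tolist())   # [31, 45]
```

Numeric columns are skipped, so only the text values change.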

Copy from applications like Excel and paste through pandas as a DataFrame.

pd.read_clipboard()

For a quick reference on data wrangling with pandas, see the official cheat sheet.